With health systems under increasing pressure, having access to timely, reconciled, robust data is critical. As a Data Services for Commissioners Regional Office (DSCRO) provider and host for the National Commissioning Data Repository (NCDR), NHS Arden & GEM Commissioning Support Unit (CSU) processes almost a third of the nation’s health data.

In 2016, the CSU’s business intelligence service recognised that a number of data flows weren’t being processed as quickly and as effectively as they could be, with an overreliance on manual manipulation.

Our team of developers redesigned and rebuilt the entire data management process to ensure it was fully automated. This has improved data quality, increased processing and reporting speed, released analytical capacity back into the service and increased transparency for customers.

The challenge

The CSU’s business intelligence service relied on a manually intensive process to manage data submission and reporting, including SLAM data from providers, SUS and other national datasets such as MHSDS and IAPT. Raw data files had to be processed manually, with analysts on 24/7 standby to handle any issues, and patient confidential data had to be removed by hand.

The management team was concerned that this approach took too long and diverted valuable analytical capacity from the service. In addition, the existing system infrastructure had reached full capacity and was no longer operating optimally.

Ultimately, this impacted customers, as there was a delay in producing monthly reports to support crucial business processes such as contract management.

Our approach

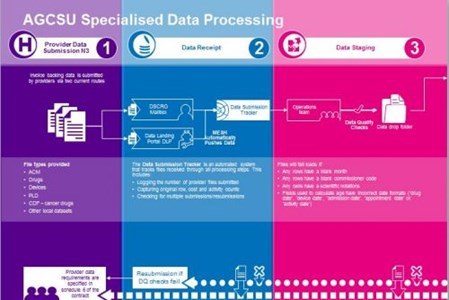

To increase data quality and processing speed, our team of developers redesigned and rebuilt the entire end-to-end data management process.

This included creating a data portal and making innovative use of existing technology such as the parallel data warehouse.

The new fully automated end-to-end process:

- streamlines receipt of data from providers into the DSCRO

- automatically maps files into an upgraded parallel data warehouse

- pseudonymises any patient confidential data, assigning unique patient identifiers

- checks data quality

- transfers files into the GEMIMA data management environment

- publishes data into the reporting suite.

In addition, the process now instantly identifies data failures and sends a notification to the team so they can investigate.
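As a rough illustration of how pseudonymisation, quality checking and failure notification can fit together, the minimal Python sketch below processes a batch of records. It is not the CSU's implementation: the field names (`nhs_number`, `activity_count`), the quality rules, the `notify` callback and the use of a keyed hash (HMAC-SHA256) for pseudonymisation are all assumptions made for the example, and the order of steps is simplified.

```python
import hashlib
import hmac

# Hypothetical secret for illustration only; a real service would keep
# the key in a managed key store, never in source code.
SECRET_KEY = b"example-key-not-for-production"


def pseudonymise(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable pseudonym for an identifier using HMAC-SHA256."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def check_quality(record: dict) -> list:
    """Return the data quality problems found in one record (example rules)."""
    problems = []
    if not record.get("nhs_number"):
        problems.append("missing nhs_number")
    if record.get("activity_count", 0) < 0:
        problems.append("negative activity_count")
    return problems


def process_file(records, notify):
    """Pseudonymise valid records and report failures via the notify callback."""
    loaded = []
    for index, record in enumerate(records):
        problems = check_quality(record)
        if problems:
            # Flag the failure straight away so the team can investigate.
            notify(f"record {index}: {', '.join(problems)}")
            continue
        # Drop the confidential identifier and keep only its pseudonym.
        clean = {k: v for k, v in record.items() if k != "nhs_number"}
        clean["patient_id"] = pseudonymise(record["nhs_number"])
        loaded.append(clean)
    return loaded
```

Because the same identifier always maps to the same pseudonym under a given key, records for one patient can still be linked across files without exposing the original identifier.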

All changes within the data warehouse are fully compliant with Information Governance guidelines and protocols.

The outcomes

The impact of the new process, which has been in place since April 2017, has been hugely positive for both internal and external customers.

The key improvements delivered include:

- Data is now processed much more quickly – when a file is received from a provider it can be loaded into our data warehouse within minutes.

- Data is released to analysts sooner: this now happens automatically three times a day, at 7am, 11am and 3pm.

- The amount of data the system can handle has been dramatically increased – we have recently been able to onboard an additional 80 providers in one area with no impact on processing times. Hundreds of files can be uploaded and processed within seconds rather than days.

- Customers now have much greater visibility of the processing of their data, which can be tracked live through our business intelligence systems.

- A series of dynamic Tableau dashboards are quickly released.

- Key reporting on contract monitoring, information schedule compliance and data reconciliation is provided in a timely manner.

- A dedicated pricing portal allows contract leads to update local pricing when prices are changed or renegotiated in year.

These improvements will continue to deliver long-term benefits for customers. As the process is now fully automated, significant amounts of analytical capacity are being released back into the business intelligence service.
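To make the release schedule in the list above concrete, the short Python sketch below works out when data loaded at a given moment would next be released to analysts. Only the 7am, 11am and 3pm slots come from this case study; the function name and the scheduling logic are assumptions for the example, not a description of the production scheduler.

```python
from datetime import datetime, time, timedelta

# Daily release slots taken from the case study: 7am, 11am and 3pm.
RELEASE_TIMES = [time(7, 0), time(11, 0), time(15, 0)]


def next_release(now: datetime) -> datetime:
    """Return the first release slot strictly after the given moment."""
    for slot in RELEASE_TIMES:
        candidate = datetime.combine(now.date(), slot)
        if candidate > now:
            return candidate
    # All of today's slots have passed, so use tomorrow's first slot.
    return datetime.combine(now.date() + timedelta(days=1), RELEASE_TIMES[0])
```

Under this sketch, a file loaded at 08:30 would reach analysts at 11:00 the same day, and one loaded after 3pm would be released at 7am the following morning.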

What next?

Based on stakeholder feedback, the team has already identified areas for future improvement, including:

- Migrating further data sets, including local data and risk stratification data, into the new process

- Implementing additional pseudonymisation processes to continue to meet national guidelines

- Improving national and local data quality by building data quality principles into contracts and reporting on compliance

- Actively encouraging use of our national and local data submission portals to reduce reliance on email.

The ability to onboard large data volumes within very short timeframes will also benefit the delivery of Sustainability and Transformation Partnerships (STPs), where reporting needs to take place across new and larger geographies.

Our business intelligence team continues to work closely with clients to look at ways to standardise datasets and improve data quality so that all commissioners can benefit from the increased processing speeds and robust reporting delivered as part of this project.
